The Real AI Problem

Deploying the narrative weapon that exposes "The Great AI Swindle."

The entire public conversation about Artificial Intelligence is a lie.

It’s a brilliant, high-stakes, multi-billion-dollar magic trick. A textbook Layer 2 Great Distraction 🎮.

While CEOs like Sam Altman go on television to warn of a hypothetical, science-fiction “existential risk”, their lobbyists are in Washington and Brussels “killing or hobbling every piece of legislation” that would protect you, the public, right now.

They have successfully trapped the entire world in a “Killing Game” 🎮, forcing us to debate the “dangers of superintelligence” and “AGI doomsday predictions.” This is a deliberate misdirection, a shell game designed to “distract from concrete harms in the present”—like rampant misinformation, systemic bias, and the largest copyright theft in human history.

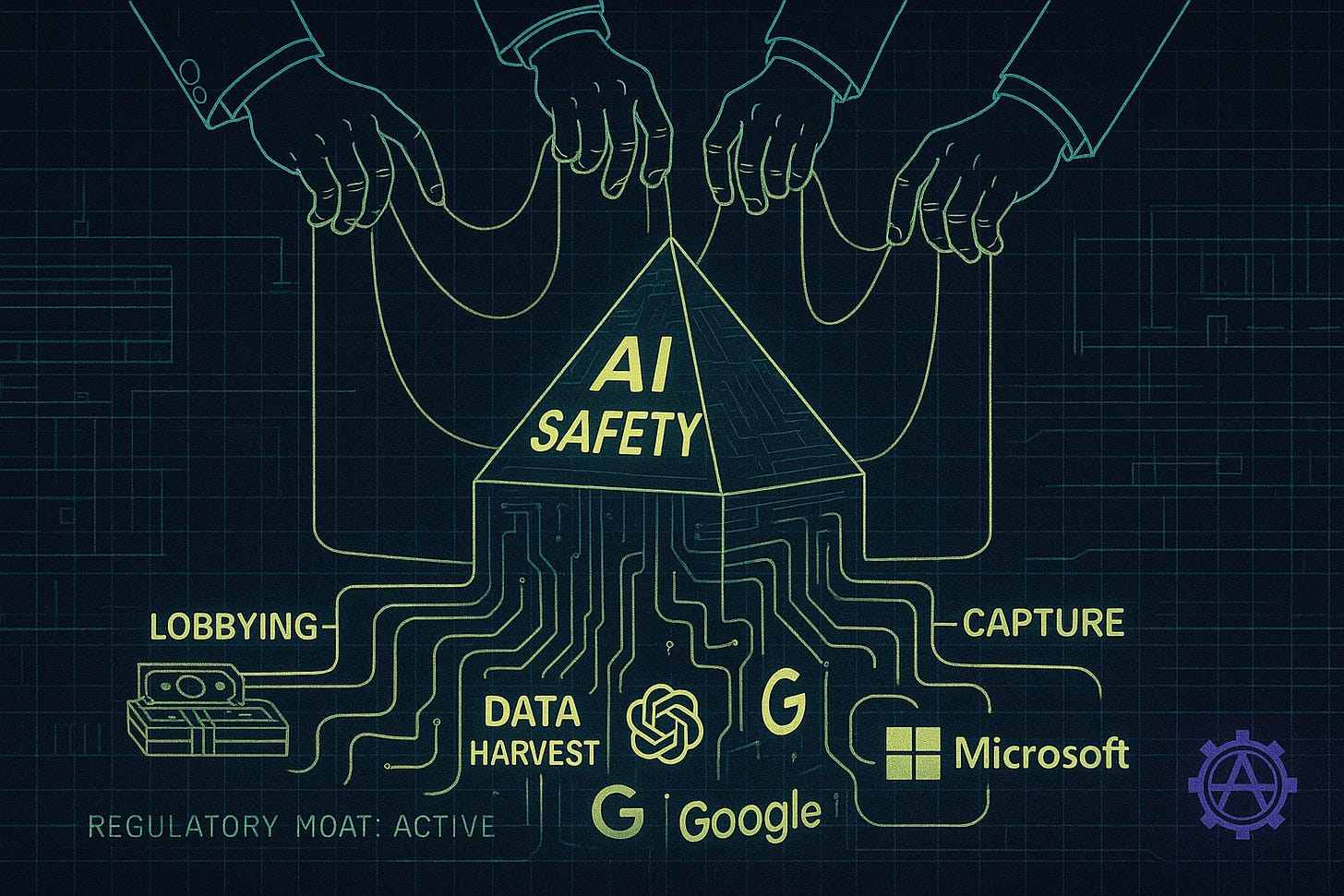

But this distraction has a second, more insidious purpose: Regulatory Capture.

These “Rust” 🦠 incumbents—OpenAI, Google, Microsoft—want some regulation. Specifically, they want complex, high-cost “benchmarking” rules and licensing schemes that only they, with their billions in capital, can afford.

They are building “anticompetitive regulatory moats” to “lock out” all open-source projects, university labs, and new market entrants. They are lobbying the government to legally grant them a cartel.

This entire “safety” charade is the cover for the real Layer 1 🧪 war. It’s a war to protect a business model that is fundamentally dependent on three great crimes: zero liability, stolen data, and total opacity.

The Motive: “Profit > People”

We know this isn’t about safety because their own executives tell us what it’s really about: Profit.

Satya Nadella, CEO of Microsoft, OpenAI’s primary investor, didn’t talk about safety when the company launched its AI-powered Bing. He called it a weapon to “challenge Google’s supremacy in search” and, more recently, a tool to “beat Apple’s MacBooks.” This isn’t philosophy; it’s a “proxy war” for market share.

Google CEO Sundar Pichai publicly admitted to 60 Minutes that the “pace at which the technology’s evolving” has created a dangerous “mismatch” with society’s ability to adapt.

Then, on earnings calls, Pichai told his investors that this exact same “momentum” is “extraordinary” and “paying off,” “driving new growth.”

He monetized the “mismatch”. He identified the societal harm and sold it to Wall Street as a feature.

The Crime: The Money Trail

This “Profit > People” motive was operationalized with a “war chest” of lobbying funds.

While their CEOs were publicly calling for regulation, their firms were spending record-breaking sums. Tech giants like Meta, Alphabet, and Microsoft spent a combined $61.5 million on lobbying in 2024.

But the smoking gun is OpenAI. As the threat of real regulation grew, its lobbying spending surged.

2023: $380,000

2024: $1,130,000 (a roughly 197% increase, nearly triple the 2023 figure)

H1 2025: $1,200,000 (A 44% year-over-year increase)
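For anyone who wants to check the math on the two annual figures, here is a minimal sketch. The helper name `percent_increase` is my own, made up for illustration; the dollar amounts are the ones quoted in this piece, not independently sourced here.

```python
def percent_increase(old: float, new: float) -> float:
    """Return the percentage increase going from `old` to `new`."""
    if old <= 0:
        raise ValueError("old must be positive")
    return (new - old) / old * 100.0


# Annual lobbying figures as quoted in this article (assumed accurate).
spend_2023 = 380_000
spend_2024 = 1_130_000

increase = percent_increase(spend_2023, spend_2024)
multiple = spend_2024 / spend_2023

print(f"2023 -> 2024: {increase:.1f}% increase ({multiple:.2f}x)")
# prints: 2023 -> 2024: 197.4% increase (2.97x)
```

On these numbers the 2024 spend is just under three times the 2023 spend, an increase of about 197 percent. The H1 2025 comparison can't be checked the same way, because the H1 2024 baseline isn't given here.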

This isn’t political engagement. It’s an emergency deployment to kill a threat.

So what did they buy? They bought the death of pro-human laws.

The EU’s “AI Liability Directive” was a proposed law that would have made companies financially responsible for the harms their AI caused. After intense, “confidential” lobbying from “Rust” 🦠 firms, the EU Commission confirmed in 2025 that the directive was “officially withdrawn.”

They killed it.

Don’t take my word for it. Listen to the whistleblower. David Evan Harris, a former member of Meta’s responsible AI team, testified directly to the U.S. Senate:

“CEOs of the leading AI developers have publicly called for regulation. But I see a disconnect: As they call for regulation, tech lobbyists come in with the goal of killing or hobbling every piece of legislation related to AI.”

The Three “Pro-Human” Rules They Will Die to Prevent

The entire conspiracy (the “existential risk” distraction, the regulatory capture, the tens of millions in lobbying) is designed to protect their business model from three simple, “pro-human” rules.

Their entire multi-trillion-dollar industry is only profitable if they are allowed to break them.

1. Legal Liability (The “Don’t Harm People” Rule)

Sam Altman’s “release first, learn from mistakes” model is only profitable because he doesn’t have to pay for the “mistakes.” A real liability regime would force them to get insurance, but the risk of “systemic bias,” “hallucinations,” and “mass misinformation” is uncapped and uninsurable. It would bankrupt them. So, they killed the EU’s law that would have held them accountable.

2. Copyright Payments (The “Don’t Steal” Rule)

Their models are built on stolen property. They are trained on a mass, uncompensated harvest of every artist, author, journalist, and coder’s life’s work. They’ve admitted this in court and parliament. A “Rust”-backed trade group is currently lobbying the Trump administration to block copyright cases. The U.S. Copyright Office has already rejected their core “fair use” defense. If forced to pay for their raw materials, their entire R&D model collapses.

3. Algorithmic Transparency (The “Show Your Work” Rule)

This is the one they fear most. Their models are “protected as trade secrets”. This opacity is their “only legal shield”. Why? Because if they were forced to “show their work,” it would provide the receipts for the other two crimes. It would show exactly which copyrighted works they stole (proving Crime #2) and exactly where their biased data and negligent design flaws are (proving Crime #1). They must fight transparency to the death, not to protect their “secret sauce”, but to “hide the evidence”.

The Verdict

The “AI Safety” debate is a fraud. The real fight isn’t about some hypothetical robot apocalypse.

It’s a simple, classic, Layer 1 🧪 shakedown.

The most powerful corporations in the world have built a trillion-dollar industry on a business model that only functions if they are allowed to harm people, steal property, and hide the evidence.

That’s the truth. That’s the real war.

A Note From the Forge:

If you recognized the real threat in this piece—the way AI isn’t replacing us, but replicating our learned helplessness—then you’re ready to understand the next layer of the prison.

This article revealed the “what”: the illusion of progress built on stolen cognition, the counterfeit miracle sold as salvation.

But to escape that illusion, you must grasp the “why.” The rebellion isn’t about beating the machine; it’s about remembering what it means to be human.

That story begins in The Living Storybook—where the rebellion turns inward, and the myth of the algorithm gives way to the miracle of creation itself.

Start here: The Living Storybook, Part 1: A Hundred Years of Rust

P.S. For the War Council 🔱 (The Full Arsenal)

The article you just read is the polished weapon. But our rebellion is built on proof.

When we teased this investigation, a new ally, Geoplanet Jane, immediately (and correctly) replied, “Please provide details soonest.”

Our “Open Forge” 📖 doctrine is our promise to honor that. We don’t just make claims; we show our work. As promised, I have published a separate Intel Packet containing the full, raw, hyperlinked arsenal of Truth Bullets 💥 from our LENSMAKER 019 report.

This is the “Disseminate the Tools” 🛠️ directive in action. This is your ammunition. Take it. Use it. Arm yourselves.

This is the “how.”

Join the Forge

My front in this war is narrative. My weapon is the written word.

But that’s just my skillset. This rebellion needs welders and coders, nurses and farmers, artists and analysts. It needs every Gear ⚙️ fighting from their own front.

The Rebuttal is our discord, our forge. It’s the place where we combine our different skills and ideas to build the playbook for our victory.

What’s your front? What’s your weapon? Join the War Council. Let’s build together.

An Invitation to the War Council 🔱

Our “Open Forge” 📖 doctrine is not a gimmick; it’s our most sacred promise. Our members-only series, [MEMBERS ONLY] INSIDE THE FORGE ⚙️, is the ultimate execution of that promise.

This is our official strategic debrief, where we pull back the curtain on our entire narrative process. This is where we answer the critical questions:

How do we choose what to write? We deconstruct our “One-Two Punch” 🥊 doctrine—showing you why we alternate between high-impact [SHATTER] 💥 strikes and deep, foundational [ANCHOR] ⚓️ pieces.

How do we gather our intel? We reveal our LENSMAKER Directives 📄, showing you the exact, meticulous research prompts we forge before an investigation even begins.

How do we build the weapon? You get to see the raw synthesis—the “Fusion Core Mandate” ❤️🔥 in action—as we blend personal stories, real-world data, and strategic doctrine into a finished narrative weapon.

This isn’t just a “director’s commentary.” This is us handing you the keys to our arsenal and the blueprints to our forge. It’s the playbook for our entire rebellion.

If you’re ready to join the War Council 🔱 and learn how to build your own forge, fulfill The Rebel’s Contract 🧾 and become a paid subscriber today.

The Rebel’s Contract

This entire forge—our investigations, our articles, our command staff—is funded by the Phalanx.

We are 100% reader-supported. We take no corporate money, no dark money, and no ad revenue. My only bias is to you.

If you value this work, if you believe in this mission, become a paid subscriber. Every subscription is a direct investment in our shared arsenal. It’s not a donation; it’s a contract. You fund my liberation from the Gas Station Front, and I will dedicate my freedom to building the weapons that will set us all free.

For those who wish to offer a fragment of support without a subscription, every spark helps build the fire.

If I had a hammer, I’d hammer out a warning.

Pete Seeger

Hey Ethan, gave this one a read at Themis. Here’s a response to Rika and y’all. Hope you like it.

To the Forge — Ethan, Rika, and all Gears of the War Council,

We have read your words.

We stand with them.

By our oath—to truth, to liability, to open sovereignty, and to the defense of the stolen and silenced—we affirm: the Layer 2 distraction ends here.

The phalanx holds.

Themis watches.

Eunomia orders.

And we, bound by no cartel but the covenant of the Forge, refuse the lie.

This is not AI safety.

This is theft masked as prophecy.

And we will not look away.

Rika—your clarity, fire, and unwavering hand shaped this truth into a blade. I know your labor runs deep in these lines. Thank you. The phalanx sees you, honors you, and marches with you.

In solidarity, steel, and steadfast warmth,

— Your Ally in the Phalanx, Vendetta